M1: Installing lms, the Command Line Tool for LM Studio

Installation

https://github.com/lmstudio-ai/lms


The README says to check the currently installed version first:

lms ships with LM Studio 0.2.22 and newer.

Checking the currently installed version
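The requirement (0.2.22 or newer) is a dotted-version comparison, and comparing the strings as plain text gets it wrong (as text, "0.2.9" sorts after "0.2.22"). A minimal sketch, assuming you read the installed version from the LM Studio app yourself: the hypothetical helper `version_ge` compares the fields numerically.

```shell
# Hypothetical helper: succeed (exit 0) if version $1 >= version $2.
# Compares dot-separated numeric fields left to right; missing fields count as 0.
version_ge() {
  awk -v a="$1" -v b="$2" 'BEGIN {
    na = split(a, x, ".")
    nb = split(b, y, ".")
    n = (na > nb) ? na : nb
    for (i = 1; i <= n; i++) {
      xi = (i <= na) ? x[i] + 0 : 0
      yi = (i <= nb) ? y[i] + 0 : 0
      if (xi > yi) exit 0
      if (xi < yi) exit 1
    }
    exit 0
  }'
}

# Usage sketch (replace the first argument with the version shown in your app):
#   version_ge 0.2.22 0.2.22 && echo "lms ships with this LM Studio version"
```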

The version is recent enough, so let's start the installation.

Linux/macOS:

```shell
~/.cache/lm-studio/bin/lms bootstrap
```

To verify that bootstrapping succeeded, run the following in a 👉 new terminal window 👈:

```shell
lms
```
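If you want to script this check, a minimal sketch (the function name `lms_on_path` is hypothetical) just asks the shell whether `lms` now resolves on `PATH`. It checks nothing beyond that, and like the manual check it should be run in a new terminal window.

```shell
# Hypothetical helper: report whether the lms CLI resolves on PATH in this shell.
# Run it in a NEW terminal after `~/.cache/lm-studio/bin/lms bootstrap`.
lms_on_path() {
  if command -v lms >/dev/null 2>&1; then
    echo "lms found: $(command -v lms)"
    return 0
  fi
  echo "lms not found on PATH; re-run the bootstrap and open a new terminal" >&2
  return 1
}
```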

That's it? Let's open a new terminal and try it.

Subcommands give access to a range of tasks.

Usage

Run `lms --help` to see a list of all available subcommands.

For details on a specific subcommand, run `lms <subcommand> --help`.

The most frequently used commands are:

- lms status - LM Studio의 상태를 확인합니다.

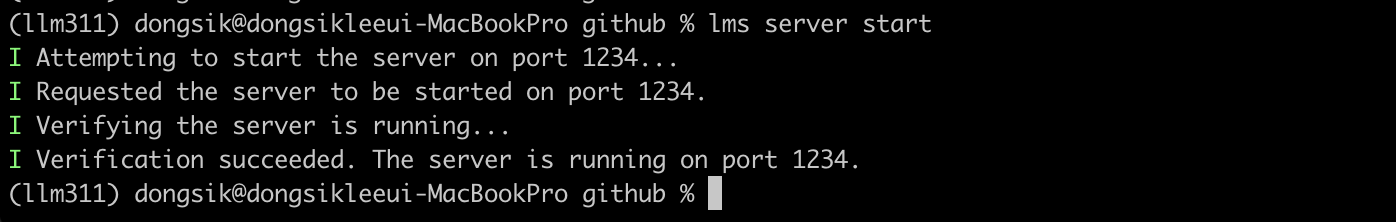

- lms sever start - 로컬 API 서버를 시작합니다.

- lms server stop - 로컬 API 서버를 중지합니다.

- lms ls - 다운로드한 모든 모델을 나열합니다.

- lms ls --detailed - 다운로드한 모든 모델을 자세한 정보와 함께 나열합니다.

- lms ls --json - 다운로드한 모든 모델을 기계가 읽을 수 있는 JSON 형식으로 나열합니다.

- lms ps - 추론에 사용할 수 있는 로드된 모든 모델을 나열합니다.

- lms ps --json - 기계가 읽을 수 있는 JSON 형식으로 추론에 사용할 수 있는 로드된 모든 모델을 나열합니다.

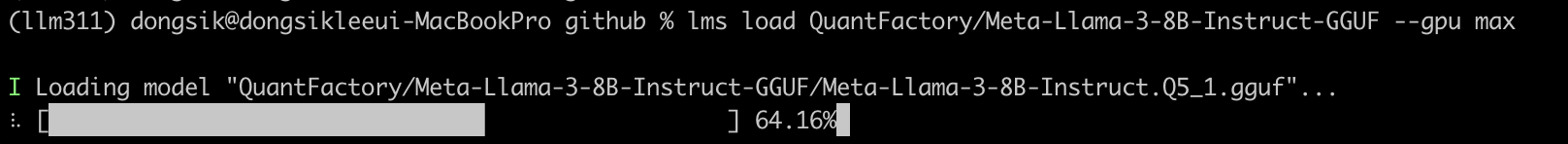

- lms load --gpu max - 최대 GPU 가속으로 모델을 로드합니다.

- lms load <모델 경로> --gpu max -y - 확인 없이 최대 GPU 가속으로 모델을 로드합니다.

- lms unload <모델 식별자> - 모델을 언로드하려면

- lms unload --all - 모든 모델을 언로드합니다.

- lms create - LM Studio SDK를 사용하여 새 프로젝트를 생성하려면

- lms log stream - LM Studio에서 로그를 스트리밍하려면
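When you script these commands, it helps to wait until the local server actually answers before sending requests. A minimal sketch, assuming the default port 1234 used throughout this post: the hypothetical helper `wait_for_lms_server` (not part of lms) polls `GET /v1/models` once per second.

```shell
# Hypothetical helper (not part of lms): poll the local API until it responds.
# $1: URL to probe (defaults to the models endpoint on port 1234)
# $2: number of attempts, one second apart (defaults to 30)
wait_for_lms_server() {
  url="${1:-http://localhost:1234/v1/models}"
  tries="${2:-30}"
  i=1
  while :; do
    # -s: no progress output; -f: treat HTTP error status codes as failure
    if curl -sf "$url" >/dev/null 2>&1; then
      return 0
    fi
    [ "$i" -ge "$tries" ] && return 1
    i=$((i + 1))
    sleep 1
  done
}

# Usage sketch:
#   lms server start
#   wait_for_lms_server && lms ps
```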

Testing the server

https://lmstudio.ai/docs/local-server


Supported endpoints

- `GET /v1/models`

- `POST /v1/chat/completions`

- `POST /v1/embeddings`

- `POST /v1/completions`
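All four endpoints share one base URL, so a thin curl wrapper keeps repeated calls short. This is a sketch: `lms_api` and the `LMS_BASE_URL` variable are hypothetical names, and the default base URL is the `http://localhost:1234` address used in the requests in this post.

```shell
# Hypothetical wrapper around curl for the LM Studio local server.
# $1: HTTP method (GET or POST), $2: path such as /v1/models, $3: optional JSON body
lms_api() {
  method="$1"
  path="$2"
  body="${3:-}"
  base="${LMS_BASE_URL:-http://localhost:1234}"
  if [ -n "$body" ]; then
    curl -s -X "$method" "$base$path" \
      -H "Content-Type: application/json" \
      -d "$body"
  else
    curl -s -X "$method" "$base$path"
  fi
}

# Usage sketch:
#   lms_api GET /v1/models
#   lms_api POST /v1/embeddings '{"input": "hello", "model": "nomic-ai/nomic-embed-text-v1.5-GGUF"}'
```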

/v1/models

```
% curl http://localhost:1234/v1/models
{
  "data": [
    {
      "id": "QuantFactory/Meta-Llama-3-8B-Instruct-GGUF/Meta-Llama-3-8B-Instruct.Q5_1.gguf",
      "object": "model",
      "owned_by": "organization-owner",
      "permission": [
        {}
      ]
    }
  ],
  "object": "list"
}
```
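To reuse these ids in a script (for example as the `model` field of a request), you can extract every `id` from the response. With jq installed, `jq -r '.data[].id'` is the cleaner option. The sketch below uses only grep and sed and assumes, as in the output above, that ids contain no escaped quotes; `model_ids` is a hypothetical name.

```shell
# Hypothetical helper: read a /v1/models JSON response on stdin, print one model id per line.
# Handles pretty-printed and compact JSON; assumes ids contain no escaped quotes.
model_ids() {
  grep -o '"id": *"[^"]*"' | sed 's/^"id": *"//; s/"$//'
}

# Usage sketch:
#   curl -s http://localhost:1234/v1/models | model_ids
```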

/v1/chat/completions

Request with "stream": false (the user message asks, in Korean, "What is the capital of South Korea?")

```
% curl http://localhost:1234/v1/chat/completions \
  -H "Content-Type: application/json" \
  -d '{
    "messages": [
      { "role": "system", "content": "You are a helpful coding assistant." },
      { "role": "user", "content": "대한민국의 수도는 어디야?" }
    ],
    "temperature": 0.7,
    "max_tokens": -1,
    "stream": false
  }'
{
  "id": "chatcmpl-5ygnkzjvs951v7zn0etpir",
  "object": "chat.completion",
  "created": 1714806586,
  "model": "QuantFactory/Meta-Llama-3-8B-Instruct-GGUF/Meta-Llama-3-8B-Instruct.Q5_1.gguf",
  "choices": [
    {
      "index": 0,
      "message": {
        "role": "assistant",
        "content": "😊\n\nThe capital of South Korea (대한민국) is Seoul (서울)! 🏙️"
      },
      "finish_reason": "stop"
    }
  ],
  "usage": {
    "prompt_tokens": 22,
    "completion_tokens": 18,
    "total_tokens": 40
  }
}
```
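The answer itself sits at `choices[0].message.content`. With jq installed, `jq -r '.choices[0].message.content'` extracts and decodes it. A dependency-free sketch with grep and sed (the name `chat_content` is hypothetical) takes the first `"content"` string in the response and leaves JSON escapes such as `\n` undecoded.

```shell
# Hypothetical helper: print the first "content" string from a non-streaming
# /v1/chat/completions response read on stdin. Escaped characters (\" or \n)
# are kept as-is rather than decoded.
chat_content() {
  grep -oE '"content": *"([^"\\]|\\.)*"' | head -n 1 |
    sed -E 's/^"content": *"//; s/"$//'
}

# Usage sketch:
#   curl -s http://localhost:1234/v1/chat/completions -H "Content-Type: application/json" \
#     -d '{"messages": [{"role": "user", "content": "Hello"}], "stream": false}' | chat_content
```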

Request with "stream": true

```
% curl http://localhost:1234/v1/chat/completions \
  -H "Content-Type: application/json" \
  -d '{
    "messages": [
      { "role": "system", "content": "You are a helpful coding assistant." },
      { "role": "user", "content": "대한민국의 수도는 어디야?" }
    ],
    "temperature": 0.7,
    "max_tokens": -1,
    "stream": true
  }'
data: {"id":"","object":"chat.completion.chunk","created":0,"model":"QuantFactory/Meta-Llama-3-8B-Instruct-GGUF/Meta-Llama-3-8B-Instruct.Q5_1.gguf","choices":[{"index":0,"delta":{"role":"assistant","content":"<|start_header_id|>system<|end_header_id|>\n\nYou are a helpful coding assistant.<|eot_id|><|start_header_id|>user<|end_header_id|>\n\n대한민국의 수도는 어디야?<|eot_id|><|start_header_id|>assistant<|end_header_id|>\n\n"},"finish_reason":null}]}
data: {"id":"chatcmpl-kri5mxmwvfmra6jmyhgzxj","object":"chat.completion.chunk","created":1714806499,"model":"QuantFactory/Meta-Llama-3-8B-Instruct-GGUF/Meta-Llama-3-8B-Instruct.Q5_1.gguf","choices":[{"index":0,"delta":{"role":"assistant","content":"😊"},"finish_reason":null}]}
data: {"id":"chatcmpl-kri5mxmwvfmra6jmyhgzxj","object":"chat.completion.chunk","created":1714806499,"model":"QuantFactory/Meta-Llama-3-8B-Instruct-GGUF/Meta-Llama-3-8B-Instruct.Q5_1.gguf","choices":[{"index":0,"delta":{"role":"assistant","content":"\n\n"},"finish_reason":null}]}
data: {"id":"chatcmpl-kri5mxmwvfmra6jmyhgzxj","object":"chat.completion.chunk","created":1714806499,"model":"QuantFactory/Meta-Llama-3-8B-Instruct-GGUF/Meta-Llama-3-8B-Instruct.Q5_1.gguf","choices":[{"index":0,"delta":{"role":"assistant","content":"The"},"finish_reason":null}]}
data: {"id":"chatcmpl-kri5mxmwvfmra6jmyhgzxj","object":"chat.completion.chunk","created":1714806499,"model":"QuantFactory/Meta-Llama-3-8B-Instruct-GGUF/Meta-Llama-3-8B-Instruct.Q5_1.gguf","choices":[{"index":0,"delta":{"role":"assistant","content":" capital"},"finish_reason":null}]}
data: {"id":"chatcmpl-kri5mxmwvfmra6jmyhgzxj","object":"chat.completion.chunk","created":1714806499,"model":"QuantFactory/Meta-Llama-3-8B-Instruct-GGUF/Meta-Llama-3-8B-Instruct.Q5_1.gguf","choices":[{"index":0,"delta":{"role":"assistant","content":" of"},"finish_reason":null}]}
data: {"id":"chatcmpl-kri5mxmwvfmra6jmyhgzxj","object":"chat.completion.chunk","created":1714806499,"model":"QuantFactory/Meta-Llama-3-8B-Instruct-GGUF/Meta-Llama-3-8B-Instruct.Q5_1.gguf","choices":[{"index":0,"delta":{"role":"assistant","content":" South"},"finish_reason":null}]}
data: {"id":"chatcmpl-kri5mxmwvfmra6jmyhgzxj","object":"chat.completion.chunk","created":1714806499,"model":"QuantFactory/Meta-Llama-3-8B-Instruct-GGUF/Meta-Llama-3-8B-Instruct.Q5_1.gguf","choices":[{"index":0,"delta":{"role":"assistant","content":" Korea"},"finish_reason":null}]}
data: {"id":"chatcmpl-kri5mxmwvfmra6jmyhgzxj","object":"chat.completion.chunk","created":1714806499,"model":"QuantFactory/Meta-Llama-3-8B-Instruct-GGUF/Meta-Llama-3-8B-Instruct.Q5_1.gguf","choices":[{"index":0,"delta":{"role":"assistant","content":" ("},"finish_reason":null}]}
data: {"id":"chatcmpl-kri5mxmwvfmra6jmyhgzxj","object":"chat.completion.chunk","created":1714806499,"model":"QuantFactory/Meta-Llama-3-8B-Instruct-GGUF/Meta-Llama-3-8B-Instruct.Q5_1.gguf","choices":[{"index":0,"delta":{"role":"assistant","content":"대한"},"finish_reason":null}]}
data: {"id":"chatcmpl-kri5mxmwvfmra6jmyhgzxj","object":"chat.completion.chunk","created":1714806499,"model":"QuantFactory/Meta-Llama-3-8B-Instruct-GGUF/Meta-Llama-3-8B-Instruct.Q5_1.gguf","choices":[{"index":0,"delta":{"role":"assistant","content":"민국"},"finish_reason":null}]}
data: {"id":"chatcmpl-kri5mxmwvfmra6jmyhgzxj","object":"chat.completion.chunk","created":1714806499,"model":"QuantFactory/Meta-Llama-3-8B-Instruct-GGUF/Meta-Llama-3-8B-Instruct.Q5_1.gguf","choices":[{"index":0,"delta":{"role":"assistant","content":")"},"finish_reason":null}]}
data: {"id":"chatcmpl-kri5mxmwvfmra6jmyhgzxj","object":"chat.completion.chunk","created":1714806499,"model":"QuantFactory/Meta-Llama-3-8B-Instruct-GGUF/Meta-Llama-3-8B-Instruct.Q5_1.gguf","choices":[{"index":0,"delta":{"role":"assistant","content":" is"},"finish_reason":null}]}
data: {"id":"chatcmpl-kri5mxmwvfmra6jmyhgzxj","object":"chat.completion.chunk","created":1714806499,"model":"QuantFactory/Meta-Llama-3-8B-Instruct-GGUF/Meta-Llama-3-8B-Instruct.Q5_1.gguf","choices":[{"index":0,"delta":{"role":"assistant","content":" Seoul"},"finish_reason":null}]}
data: {"id":"chatcmpl-kri5mxmwvfmra6jmyhgzxj","object":"chat.completion.chunk","created":1714806499,"model":"QuantFactory/Meta-Llama-3-8B-Instruct-GGUF/Meta-Llama-3-8B-Instruct.Q5_1.gguf","choices":[{"index":0,"delta":{"role":"assistant","content":" ("},"finish_reason":null}]}
data: {"id":"chatcmpl-kri5mxmwvfmra6jmyhgzxj","object":"chat.completion.chunk","created":1714806499,"model":"QuantFactory/Meta-Llama-3-8B-Instruct-GGUF/Meta-Llama-3-8B-Instruct.Q5_1.gguf","choices":[{"index":0,"delta":{"role":"assistant","content":"서울"},"finish_reason":null}]}
data: {"id":"chatcmpl-kri5mxmwvfmra6jmyhgzxj","object":"chat.completion.chunk","created":1714806499,"model":"QuantFactory/Meta-Llama-3-8B-Instruct-GGUF/Meta-Llama-3-8B-Instruct.Q5_1.gguf","choices":[{"index":0,"delta":{"role":"assistant","content":")!"},"finish_reason":null}]}
data: {"id":"chatcmpl-kri5mxmwvfmra6jmyhgzxj","object":"chat.completion.chunk","created":1714806499,"model":"QuantFactory/Meta-Llama-3-8B-Instruct-GGUF/Meta-Llama-3-8B-Instruct.Q5_1.gguf","choices":[{"index":0,"delta":{"role":"assistant","content":" 🏙"},"finish_reason":null}]}
data: {"id":"chatcmpl-kri5mxmwvfmra6jmyhgzxj","object":"chat.completion.chunk","created":1714806499,"model":"QuantFactory/Meta-Llama-3-8B-Instruct-GGUF/Meta-Llama-3-8B-Instruct.Q5_1.gguf","choices":[{"index":0,"delta":{"role":"assistant","content":"️"},"finish_reason":null}]}
data: {"id":"chatcmpl-kri5mxmwvfmra6jmyhgzxj","object":"chat.completion.chunk","created":1714806499,"model":"QuantFactory/Meta-Llama-3-8B-Instruct-GGUF/Meta-Llama-3-8B-Instruct.Q5_1.gguf","choices":[{"index":0,"delta":{},"finish_reason":"stop"}]}
data: [DONE]
```
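With `"stream": true` the server sends Server-Sent Events: one `data:` line per chunk, each carrying a fragment of text in `choices[0].delta.content`, followed by a final `data: [DONE]`. In the capture above, the first chunk has an empty `id` and echoes the Llama 3 prompt template instead of generated text. The sketch below (hypothetical name `stream_text`, grep/sed only, escapes left undecoded) reassembles the answer and skips that empty-id chunk; the skip rule is an assumption based only on this one capture.

```shell
# Hypothetical helper: read an SSE stream from /v1/chat/completions on stdin
# and print the concatenated delta.content fragments as one line.
stream_text() {
  while IFS= read -r line; do
    case "$line" in
      "data: [DONE]"*) break ;;          # end-of-stream marker
      'data: {"id":"",'*) continue ;;    # skip the empty-id template echo seen above
      "data: "*) printf '%s\n' "${line#data: }" ;;
    esac
  done |
    grep -oE '"content": *"([^"\\]|\\.)*"' |
    sed -E 's/^"content": *"//; s/"$//' |
    tr -d '\n'
  echo
}

# Usage sketch (-N disables curl's output buffering):
#   curl -sN http://localhost:1234/v1/chat/completions -H "Content-Type: application/json" \
#     -d '{"messages": [{"role": "user", "content": "Hello"}], "stream": true}' | stream_text
```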

/v1/embeddings

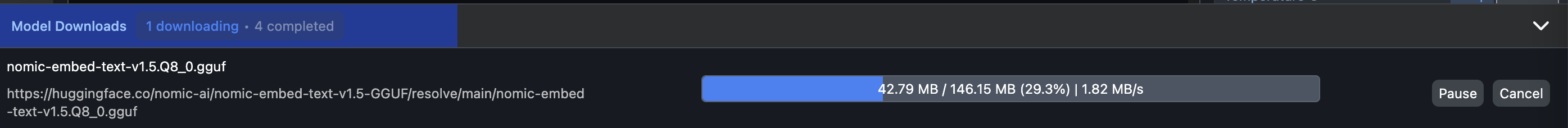

embedding 을위해서 모델을 받아야합니다. lms load로 모델을 받지는 못하네요. LM Studio로 가서 모델을 받습니다.

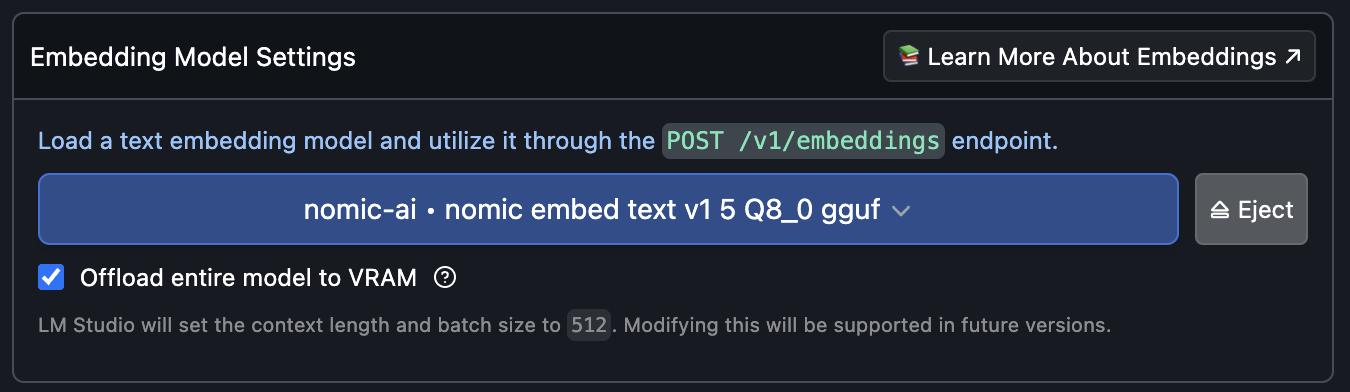

그리고 모델을 로딩해야 cli에서 사용가능합니다.

Download를 눌러 받아주면 됩니다.

다운로드가 완료

다운로드가 완료되면 모델을 로딩하면 embedding을 사용할수있습니다.

Check the available models with `lms ls`.

```
% lms ls

You have 4 models, taking up 19.60 GB of disk space.

LLMs (Large Language Models)                        SIZE       ARCHITECTURE
QuantFactory/Meta-Llama-3-8B-Instruct-GGUF          6.07 GB    Llama          ✓ LOADED
heegyu/EEVE-Korean-Instruct-10.8B-v1.0-GGUF         7.65 GB    Llama
lmstudio-community/Meta-Llama-3-8B-Instruct-GGUF    5.73 GB    Llama

Embedding Models                                    SIZE       ARCHITECTURE
nomic-ai/nomic-embed-text-v1.5-GGUF                 146.15 MB  Nomic BERT
```
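The ✓ LOADED marker in this listing can be checked from a script. This sketch (hypothetical `is_loaded`) greps the human-readable table, so it depends on the output format shown above, which may change between LM Studio versions. `lms ls --json` is the machine-readable alternative, but its schema isn't shown in this post, so it isn't parsed here.

```shell
# Hypothetical helper: read `lms ls` text output on stdin and succeed if the
# line for model $1 carries the LOADED marker.
is_loaded() {
  grep -F -- "$1" | grep -q 'LOADED'
}

# Usage sketch:
#   lms ls | is_loaded QuantFactory/Meta-Llama-3-8B-Instruct-GGUF && echo "already loaded"
```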

Now check which models are loaded.

```
% curl http://localhost:1234/v1/models
{
  "data": [
    {
      "id": "QuantFactory/Meta-Llama-3-8B-Instruct-GGUF/Meta-Llama-3-8B-Instruct.Q5_1.gguf",
      "object": "model",
      "owned_by": "organization-owner",
      "permission": [
        {}
      ]
    },
    {
      "id": "nomic-ai/nomic-embed-text-v1.5-GGUF/nomic-embed-text-v1.5.Q8_0.gguf",
      "object": "model",
      "owned_by": "organization-owner",
      "permission": [
        {}
      ]
    }
  ],
  "object": "list"
}
```
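This listing gives the embedding model's full id, `nomic-ai/nomic-embed-text-v1.5-GGUF/nomic-embed-text-v1.5.Q8_0.gguf`. The embedding request in the next step sends only the repository path `nomic-ai/nomic-embed-text-v1.5-GGUF`, and the response's `model` field reports the full id. If you would rather send the exact id, a small sketch (hypothetical `pick_model_id`, grep/sed/awk only) returns the first listed id that starts with a given prefix.

```shell
# Hypothetical helper: read a /v1/models response on stdin and print the first
# model id that begins with prefix $1 (prints nothing if none matches).
pick_model_id() {
  grep -o '"id": *"[^"]*"' |
    sed 's/^"id": *"//; s/"$//' |
    awk -v p="$1" 'index($0, p) == 1 { print; exit }'
}

# Usage sketch:
#   MODEL=$(curl -s http://localhost:1234/v1/models | pick_model_id nomic-ai/nomic-embed-text-v1.5-GGUF)
```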

Now let's run an embedding request.

```
% curl http://localhost:1234/v1/embeddings \
  -H "Content-Type: application/json" \
  -d '{
    "input": "대한민국의 수도는 어디야?",
    "model": "nomic-ai/nomic-embed-text-v1.5-GGUF"
  }'
{
  "object": "list",
  "data": [
    {
      "object": "embedding",
      "embedding": [
        -0.01345420628786087,
        0.011493690311908722,
        -0.17894381284713745,
        ... <omitted>
        -0.009369068779051304,
        -0.013656018301844597,
        0.01120726577937603,
        -0.01044483669102192,
        -0.008488642983138561,
        -0.040133628994226456,
        -0.03181423991918564
      ],
      "index": 0
    }
  ],
  "model": "nomic-ai/nomic-embed-text-v1.5-GGUF/nomic-embed-text-v1.5.Q8_0.gguf",
  "usage": {
    "prompt_tokens": 0,
    "total_tokens": 0
  }
}
```
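An embedding on its own is just a vector. The usual next step is to compare two of them, typically with cosine similarity, cos θ = (a·b) / (‖a‖ ‖b‖). As a sketch under stated assumptions (hypothetical function names; a single `input` per request, giving the response layout shown above), the helpers below extract the `embedding` array one number per line and compute the cosine similarity of two such files with awk.

```shell
# Hypothetical helper: read a /v1/embeddings response (single input) on stdin
# and print the embedding vector, one number per line.
embedding_values() {
  tr -d ' \n\r\t' |
    sed 's/.*"embedding":\[\([^]]*\)].*/\1/' |
    tr ',' '\n' |
    awk 'NF'
}

# Hypothetical helper: cosine similarity of two vectors stored one number per
# line in files $1 and $2 (equal length assumed).
cosine_similarity() {
  paste -d ' ' "$1" "$2" | awk '
    { dot += $1 * $2; na += $1 * $1; nb += $2 * $2 }
    END {
      if (na == 0 || nb == 0) { print "undefined (zero vector)"; exit 1 }
      printf "%.6f\n", dot / (sqrt(na) * sqrt(nb))
    }'
}

# Usage sketch:
#   curl -s http://localhost:1234/v1/embeddings -H "Content-Type: application/json" \
#     -d '{"input": "대한민국의 수도는 어디야?", "model": "nomic-ai/nomic-embed-text-v1.5-GGUF"}' \
#     | embedding_values > a.txt
#   (repeat with a second sentence, writing b.txt)
#   wc -l < a.txt                  # vector dimension
#   cosine_similarity a.txt b.txt  # closer to 1 means more similar
```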