M1 Ollama로 heegyu/EEVE-Korean-Instruct-10.8B-v1.0-GGUF 양자화 모델 테스트

테디님이 올려놓으신 유튜브 영상을 보고 Macbook M1에서 따라해봤습니다. 중간에 몇가지 설정에 맞게 약간 변경해가면서 테스트 합니다.

https://www.youtube.com/watch?v=VkcaigvTrug&t=23s

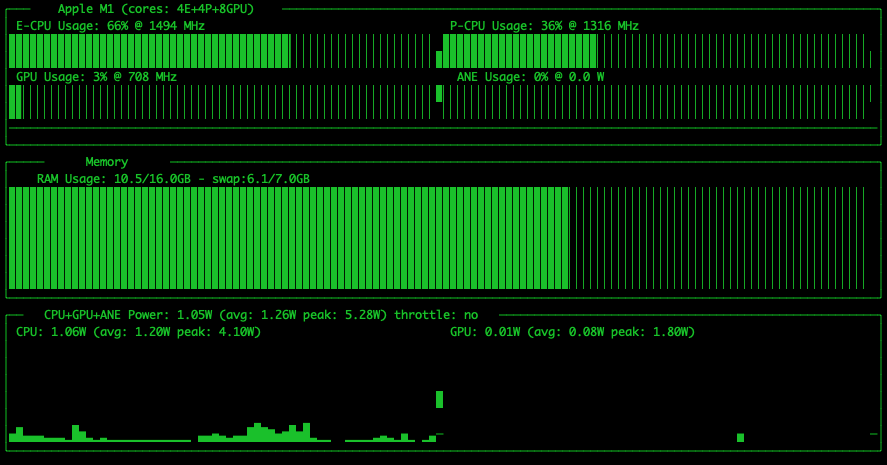

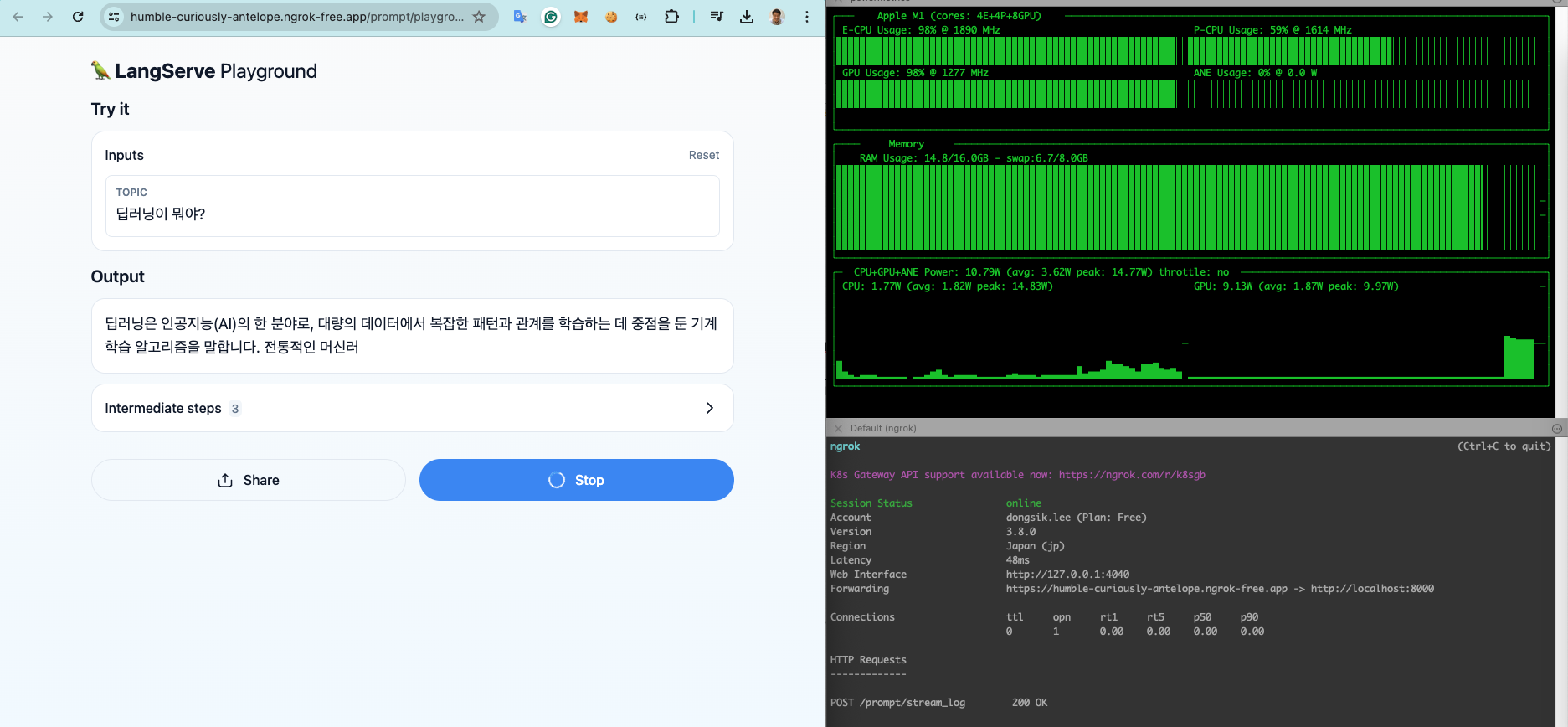

# GPU 모니터링

% sudo asitop

# LangServe 실행

% python server.py

# ngrok으로 external 서비스

% ngrok http --domain=humble-curiously-antelope.ngrok-free.app 8000

# PDF를 RAG

% streamlit run main.pyconda 가상환경 만들고 requirements.txt로 필요한 모듈 설치

conda create -n llm311 python=3.11

% conda env list

# conda environments:

#

base * /Users/dongsik/miniconda

llm /Users/dongsik/miniconda/envs/llm

llm311 /Users/dongsik/miniconda/envs/llm311

% conda activate llm311

% python -V

Python 3.11.9

% pip list

Package Version

---------- -------

pip 23.3.1

setuptools 68.2.2

wheel 0.41.2

예제 github을 내 github으로 fork 한후 내 PC에 clone 받아서 내환경에 맞게 수정하면서 진행합니다.

teddy github : https://github.com/teddylee777/langserve_ollama

내 github : https://github.com/dongshik/langserve_ollama

% ll

total 1000

drwxr-xr-x@ 12 dongsik staff 384 Apr 20 09:22 .

drwxr-xr-x 4 dongsik staff 128 Apr 19 16:35 ..

drwxr-xr-x@ 14 dongsik staff 448 Apr 19 16:40 .git

-rw-r--r--@ 1 dongsik staff 50 Apr 19 16:35 .gitignore

-rw-r--r--@ 1 dongsik staff 3343 Apr 19 16:35 README.md

drwxr-xr-x@ 8 dongsik staff 256 Apr 19 16:35 app

drwxr-xr-x@ 8 dongsik staff 256 Apr 19 16:35 example

drwxr-xr-x@ 3 dongsik staff 96 Apr 19 16:35 images

drwxr-xr-x@ 4 dongsik staff 128 Apr 19 16:35 ollama-modelfile

-rw-r--r--@ 1 dongsik staff 481043 Apr 19 16:35 poetry.lock

-rw-r--r--@ 1 dongsik staff 659 Apr 19 16:35 pyproject.toml

-rw-r--r--@ 1 dongsik staff 14983 Apr 19 16:35 requirements.txt

pip install -r requirements.txt

% pip install -r requirements.txt

Ignoring colorama: markers 'python_version >= "3.11.dev0" and python_version < "3.12.dev0" and platform_system == "Windows"' don't match your environment

% pip list | grep lang

langchain 0.1.16

langchain-community 0.0.32

langchain-core 0.1.42

langchain-openai 0.1.3

langchain-text-splitters 0.0.1

langchainhub 0.1.15

langdetect 1.0.9

langserve 0.0.51

langsmith 0.1.47

% pip list | grep huggingface

huggingface-hub 0.22.2

Huggingface에서 모델 Download 받고 Ollama에 EEVE Q5 모델 등록하고 구동

huggingface-cli download \

heegyu/EEVE-Korean-Instruct-10.8B-v1.0-GGUF \

ggml-model-Q5_K_M.gguf \

--local-dir /Users/dongsik/GitHub/teddylee777/langserve_ollama/ollama-modelfile/EEVE-Korean-Instruct-10.8B-v1.0 \

--local-dir-use-symlinks False

Consider using `hf_transfer` for faster downloads. This solution comes with some limitations. See https://huggingface.co/docs/huggingface_hub/hf_transfer for more details.

downloading https://huggingface.co/heegyu/EEVE-Korean-Instruct-10.8B-v1.0-GGUF/resolve/main/ggml-model-Q5_K_M.gguf to /Users/dongsik/.cache/huggingface/hub/tmpkuuur4ki

ggml-model-Q5_K_M.gguf: 37%|███████████████████████████▌ | 2.81G/7.65G [04:35<09:55, 8.13MB/s]

% ll -sh

total 14954512

0 drwxr-xr-x@ 5 dongsik staff 160B Apr 20 10:02 .

0 drwxr-xr-x@ 4 dongsik staff 128B Apr 20 10:02 ..

8 -rw-r--r--@ 1 dongsik staff 369B Apr 19 16:35 Modelfile

8 -rw-r--r--@ 1 dongsik staff 419B Apr 19 16:35 Modelfile-V02

14954496 -rw-r--r-- 1 dongsik staff 7.1G Apr 20 10:02 ggml-model-Q5_K_M.gguf

<경로>/langserve_ollama/ollama-modelfile/EEVE-Korean-Instruct-10.8B-v1.0/Modelfile

FROM ggml-model-Q5_K_M.gguf

TEMPLATE """{{- if .System }}

<s>{{ .System }}</s>

{{- end }}

<s>Human:

{{ .Prompt }}</s>

<s>Assistant:

"""

SYSTEM """A chat between a curious user and an artificial intelligence assistant. The assistant gives helpful, detailed, and polite answers to the user's questions."""

PARAMETER TEMPERATURE 0

PARAMETER stop <s>

PARAMETER stop </s>

모델파일 설정하지 않으면 답변이 끝났을때 이상하게 대답할수도있기때문에 필요합니다.

System prompt가 있다면 중간(.System) 위치에 넣어으라는 의미이며 여기서는 'SYSTEM'이 이자리를 치환하게 됩니다.

그다음 <s> 스페셜 토큰이 앞에 붙어서 사용자 즉 Human의 질문 .Prompt가 들어가게 됩니다.

그후 모델 Assistant가 받아서 답변하게 됩니다.

※ Note!!

Modelfile에서 <s>는 문장의 시작을 나타내는 특수 토큰입니다. 이것은 "문장의 시작"을 나타내기 위해 사용됩니다. 예를 들어, 자연어 처리 작업에서 모델이 문장의 시작을 식별하고, 이에 따라 적절한 처리를 수행할 수 있도록 합니다. 이것은 토큰화된 데이터의 일부로서 모델에 제공됩니다.

tokenizer.chat_template

| {% if messages[0]['role'] == 'system' %}{% set loop_messages = messages[1:] %}{% set system_message = messages[0]['content'] %}{% else %}{% set loop_messages = messages %}{% set system_message = 'You are a helpful assistant.' %}{% endif %}{% if not add_generation_prompt is defined %}{% set add_generation_prompt = false %}{% endif %}{% for message in loop_messages %}{% if loop.index0 == 0 %}{{'<|im_start|>system ' + system_message + '<|im_end|> '}}{% endif %}{{'<|im_start|>' + message['role'] + ' ' + message['content'] + '<|im_end|>' + ' '}}{% endfor %}{% if add_generation_prompt %}{{ '<|im_start|>assistant ' }}{% endif %} |

https://huggingface.co/yanolja/EEVE-Korean-Instruct-10.8B-v1.0

Prompt Template

A chat between a curious user and an artificial intelligence assistant. The assistant gives helpful, detailed, and polite answers to the user's questions.

Human: {prompt}

Assistant:

ollama 목록 확인

% ollama list

NAME ID SIZE MODIFIED

eeve:q4 68f4c2c2d9fe 6.5 GB 7 days ago

gemma:2b b50d6c999e59 1.7 GB 10 days ago

ollama가 잘 구동되어 있는지 확인합니다.

% ps -ef | grep ollama

501 3715 3691 0 Wed01PM ?? 0:29.51 /Applications/Ollama.app/Contents/Resources/ollama serve

501 4430 1 0 Wed01PM ?? 0:00.03 /Applications/Ollama.app/Contents/Frameworks/Squirrel.framework/Resources/ShipIt com.electron.ollama.ShipIt /Users/dongsik/Library/Caches/com.electron.ollama.ShipIt/ShipItState.plist

501 61608 3197 0 10:45AM ttys002 0:00.01 grep ollama

새로받은 모델을 ollama에 등록합니다

ollama create eeve:q5 -f ollama-modelfile/EEVE-Korean-Instruct-10.8B-v1.0/Modelfile

저는 위의 Modelfile로 ollama등록할려고 하니 "Error: unknown parameter 'TEMPERATURE'"가 발생했습니다.

그래서 소문자 temperature로 변경해서 생성되었습니다.

만일 동일한 에러가 발생한다면 소문자 temperature로 변경해서 생성해보시기 바랍니다.

% ollama create eeve:q5 -f ollama-modelfile/EEVE-Korean-Instruct-10.8B-v1.0/Modelfile

transferring model data

creating model layer

creating template layer

creating system layer

creating parameters layer

creating config layer

using already created layer sha256:b9e3d1ad5e8aa6db09610d4051820f06a5257b7d7f0b06c00630e376abcfa4c1

writing layer sha256:6b70a2ad0d545ca50d11b293ba6f6355eff16363425c8b163289014cf19311fc

writing layer sha256:1fa69e2371b762d1882b0bd98d284f312a36c27add732016e12e52586f98a9f5

writing layer sha256:3ab8c1bbd3cd85e1b39b09f5ff9a76e64da20ef81c22ec0937cc2e7076f1a81c

writing layer sha256:d86595b443c06710a3e5ba27700c6a93ded80100ff1aa808a7f3444ff529fa70

writing manifest

success

% ollama list

NAME ID SIZE MODIFIED

eeve:q4 68f4c2c2d9fe 6.5 GB 7 days ago

eeve:q5 0732d4a47219 7.7 GB 7 minutes ago

gemma:2b b50d6c999e59 1.7 GB 10 days ago

ollama run eeve:q5

% ollama run eeve:q5

>>> 대한민국의 수도는 어디야?

안녕하세요! 대한민국의 수도에 대해 궁금해하시는군요. 서울이 바로 그 곳입니다! 서울은 나라의 북부에 위치해 있으며 정치, 경제, 문화의 중심지 역할을 하고 있습니다. 2019년 기준으로 약 970만 명의 인구를 가진 대도시로,

세계에서 가장 큰 도시 중 하나입니다. 또한 세계적인 금융 허브이자 주요 관광지로, 경복궁, 남산타워, 명동과 같은 다양한 역사적 및 현대적 명소를 자랑하고 있습니다. 서울은 활기찬 밤문화로도 유명하며, 많은 바와 클럽

관광객과 현지인 모두를 끌어들입니다. 대한민국의 수도에 대해 더 알고 싶으신 것이 있으신가요?

>>>

아래 문구로 질문해보겠습니다.

한국의 수도는 어디인가요? 아래 선택지 중 골라주세요.\n\n(A) 경성\n(B) 부산\n(C) 평양\n(D) 서울\n(E) 전주

>>> 한국의 수도는 어디인가요? 아래 선택지 중 골라주세요.\n\n(A) 경성\n(B) 부산\n(C) 평양\n(D) 서울\n(E) 전주

대한민국의 수도에 대한 질문에 답변해 주셔서 감사합니다! 정답은 (D) 서울입니다. 서울은 나라의 북부에 위치해 있으며 정치, 경제, 문화의 중심지 역할을 하고 있습니다. 2019년 기준으로 약 970만 명의 인구를

대도시로, 세계에서 가장 큰 도시 중 하나입니다. 또한 세계적인 금융 허브이자 주요 관광지로, 경복궁, 남산타워, 명동과 같은 다양한 역사적 및 현대적 명소를 자랑하고 있습니다. 서울은 활기찬 밤문화로도 유명하며,

많은 바와 클럽이 관광객과 현지인 모두를 끌어들입니다. 대한민국의 수도에 대해 더 알고 싶으신 것이 있으신가요?

질문과 동시에 답변이 나오는것처럼 작동합니다. 속도도 좋고 답변의 퀄리티도 좋습니다.

>>> 다음 지문을 읽고 문제에 답하시오.

...

... ---

...

... 1950년 7월, 한국 전쟁 초기에 이승만 대통령은 맥아더 장군에게 유격대원들을 북한군의 후방에 침투시키는 방안을 제안했다. 이후, 육군본부는 육본직할 유격대와 육본 독립 유격대를 편성했다. 국군은 포항과 인접한 장사동 지역에 상륙작

... 전을 수행할 부대로 독립 제1유격대대를 선정했다. 육군본부는 독립 제1유격대대에 동해안의 장사동 해안에 상륙작전을 감행하여 북한군 제2군단의 보급로를 차단하고 국군 제1군단의 작전을 유리하게 하기 위한 작전명령(육본 작명 제174호)

... 을 하달했다. 9월 14일, 독립 제1유격대대는 부산에서 LST 문산호에 승선하여 영덕군의 장사동으로 출항했다.

...

... 1950년 9월 15일, 독립 제1유격대대는 장사동 해안에 상륙을 시도하였으나 태풍 케지아로 인한 높은 파도와 안개로 인해 어려움을 겪었다. LST 문산호는 북한군의 사격과 파도로 인해 좌초되었고, 상륙부대는 09:00시경에 전원이

... 상륙을 완료하였다. 그 후, 15:00시경에 200고지를 점령하였고, 다양한 무기와 장비를 노획하였다. 9월 16일과 17일에는 독립 제1유격대대가 여러 위치에서 북한군과의 전투를 벌였으며, 미 구축함과의 연락 두절로 인해 추가적인

... 어려움을 겪었다.

...

... 장사동에서 위급한 상황에 처한 독립 제1유격대대를 구출하기 위해 해군본부는 LT-1(인왕호)를 급파했으나, LST 문산호의 구출에 실패했다. 해군본부는 상륙부대의 철수를 지원하기 위해 LST 조치원호를 현지로 보냈다. 9월 18일,

... 이명흠 부대장은 유엔 해군과의 협력 하에 부족한 식량과 탄약 지원을 받았다. 9월 19일, 유엔군의 함포지원과 함께 LST 조치원호가 도착하여 철수 작전을 시작했다. 스피어 소령은 직접 해안에 상륙하여 구조작전을 지시하였다. 9월 2

... 0일, 725명이 부산항으로 복귀했으나, 32명이 장사동 해안에 남아 북한군의 포로가 되었거나 탈출하여 국군에 합류하였다.

...

... 장사리 전투가 인천 상륙작전의 양동작전으로 알려졌으나, 이 전투가 드라마틱한 요소로 인해 과장되었으며, 실제로는 인천 상륙작전과 큰 관련이 없다. 또한, 북한이나 중국의 군사적 상황을 고려할 때, 장사리에서의 전투가 낙동강 전선에 영

... 향을 끼칠 가능성은 낮다.

...

... ---

...

... 문제

... 1. 지문에 나오는 지명을 모두 쓰시오.

... 2. 그중 대게로 유명한 곳은?

지문에 나오는 지명은 다음과 같습니다:

- 포항

- 장사동

- 영덕군

- 부산

- 문산호

- 조치원호

- 스피어 소령

- 낙동강 전선

대게로 유명한 곳은 영덕군입니다.

ollama 쉘에서 나올때는 Use Ctrl + d or /bye to exit.

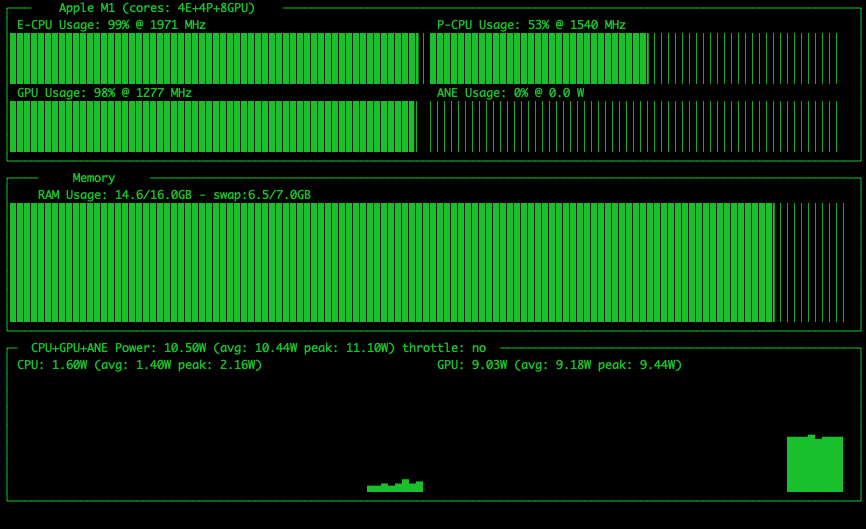

2021년형 14인치 MacBook Pro의 M1 Pro CPU는 10코어이고 GPU는 16코어입니다.

2020년형 13인치 MacBook Pro의 M1 CPU는 8코어이고 GPU는 8코어입니다. (저는 이겁니다)

cpu를 100% 까지 사용하면서 일했습니다. (수고했어)

LangServe로 모델 서빙

langserve_ollama % ll app

total 40

drwxr-xr-x@ 8 dongsik staff 256 Apr 19 16:35 .

drwxr-xr-x@ 12 dongsik staff 384 Apr 20 09:22 ..

-rw-r--r--@ 1 dongsik staff 0 Apr 19 16:35 __init__.py

-rw-r--r--@ 1 dongsik staff 549 Apr 19 16:35 chain.py

-rw-r--r--@ 1 dongsik staff 723 Apr 19 16:35 chat.py

-rw-r--r--@ 1 dongsik staff 328 Apr 19 16:35 llm.py

-rw-r--r--@ 1 dongsik staff 1444 Apr 19 16:35 server.py

-rw-r--r--@ 1 dongsik staff 559 Apr 19 16:35 translator.py

(llm311) dongsik@dongsikleeui-MacBookPro langserve_ollama %

chat.py, chain.py, llm.py, translator.py 세개 파일의 llm 모델명을 내 환경에 맞게 수정합니다.

# LangChain이 지원하는 다른 채팅 모델을 사용합니다. 여기서는 Ollama를 사용합니다.

#llm = ChatOllama(model="EEVE-Korean-10.8B:latest")

llm = ChatOllama(model="eeve:q5")

server.py 실행

(llm311) dongsik@dongsikleeui-MacBookPro langserve_ollama % cd app

(llm311) dongsik@dongsikleeui-MacBookPro app % pwd

/Users/dongsik/GitHub/teddylee777/langserve_ollama/app

(llm311) dongsik@dongsikleeui-MacBookPro app % ll

total 40

drwxr-xr-x@ 8 dongsik staff 256 Apr 19 16:35 .

drwxr-xr-x@ 12 dongsik staff 384 Apr 20 09:22 ..

-rw-r--r--@ 1 dongsik staff 0 Apr 19 16:35 __init__.py

-rw-r--r--@ 1 dongsik staff 584 Apr 20 13:15 chain.py

-rw-r--r--@ 1 dongsik staff 758 Apr 20 13:15 chat.py

-rw-r--r--@ 1 dongsik staff 363 Apr 20 13:15 llm.py

-rw-r--r--@ 1 dongsik staff 1444 Apr 19 16:35 server.py

-rw-r--r--@ 1 dongsik staff 594 Apr 20 13:15 translator.py

(llm311) dongsik@dongsikleeui-MacBookPro app % python server.py

http://0.0.0.0:8000/prompt/playground/

질문 과 답변

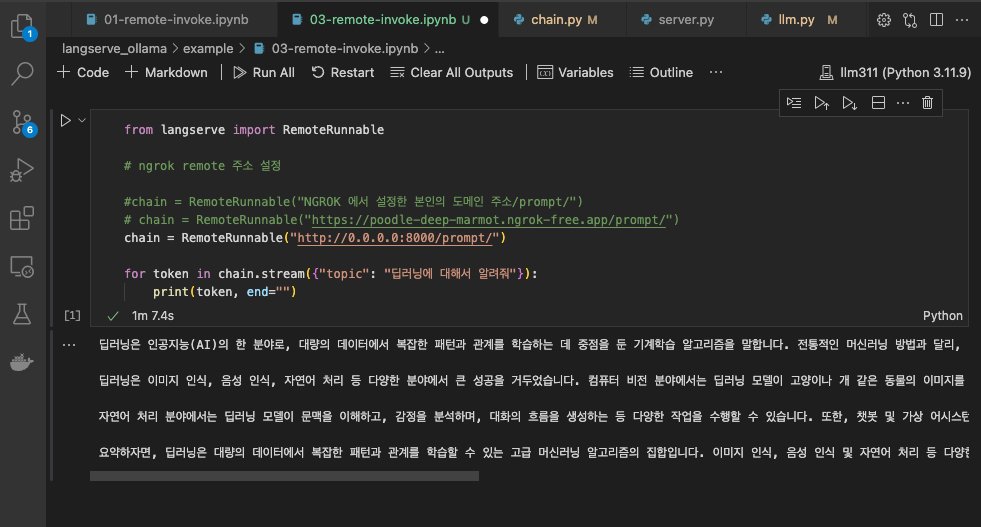

RemoteRunable로 LangServe를 호출 하도록 변경

<경로>/langserve_ollama/example

% ll

total 120

drwxr-xr-x@ 9 dongsik staff 288 Apr 20 13:50 .

drwxr-xr-x@ 12 dongsik staff 384 Apr 20 09:22 ..

drwxr-xr-x@ 3 dongsik staff 96 Apr 19 16:35 .streamlit

-rw-r--r--@ 1 dongsik staff 12504 Apr 19 16:35 00-ollama-test.ipynb

-rw-r--r--@ 1 dongsik staff 4885 Apr 19 16:35 01-remote-invoke.ipynb

-rw-r--r--@ 1 dongsik staff 3775 Apr 19 16:35 02-more-examples.ipynb

-rw-r--r--@ 1 dongsik staff 6222 Apr 19 16:35 main.py

-rw-r--r--@ 1 dongsik staff 14708 Apr 19 16:35 requirements.txt

01-remote-invoke.ipynb의 로컬 LangServe 주소로 변경합니다

from langserve import RemoteRunnable

# ngrok remote 주소 설정

#chain = RemoteRunnable("NGROK 에서 설정한 본인의 도메인 주소/prompt/")

# chain = RemoteRunnable("https://poodle-deep-marmot.ngrok-free.app/prompt/")

chain = RemoteRunnable("http://0.0.0.0:8000/prompt/")

for token in chain.stream({"topic": "딥러닝에 대해서 알려줘"}):

print(token, end="")

ngrok을 이용해서 로컬 LangServe 를 Port Forwarding하기

ngrok 가입

https://dashboard.ngrok.com/cloud-edge/domains

M1용 설치 파일을 다운로드 받아서 설치합니다.

https://dashboard.ngrok.com/get-started/setup/macos

무료 도메인 설정

humble-curiously-antelope.ngrok-free.app

LangServe 구동된 포트로 ngok 도메인 지정해서 포트 포워딩

ngrok http --domain=humble-curiously-antelope.ngrok-free.app 8000

% ngrok http --domain=humble-curiously-antelope.ngrok-free.app 8000

ngrok (Ctrl+C to quit)

K8s Gateway API support available now: https://ngrok.com/r/k8sgb

Session Status online

Account dongsik.lee (Plan: Free)

Version 3.8.0

Region Japan (jp)

Latency 45ms

Web Interface http://127.0.0.1:4040

Forwarding https://humble-curiously-antelope.ngrok-free.app -> http://localhost:8000

Connections ttl opn rt1 rt5 p50 p90

0 0 0.00 0.00 0.00 0.00

https://humble-curiously-antelope.ngrok-free.app/prompt/playground/

ngrok url로 질의를 해보면 local 서버의 GPU가 100%로 올라가면서 Output을 만들고있습니다.

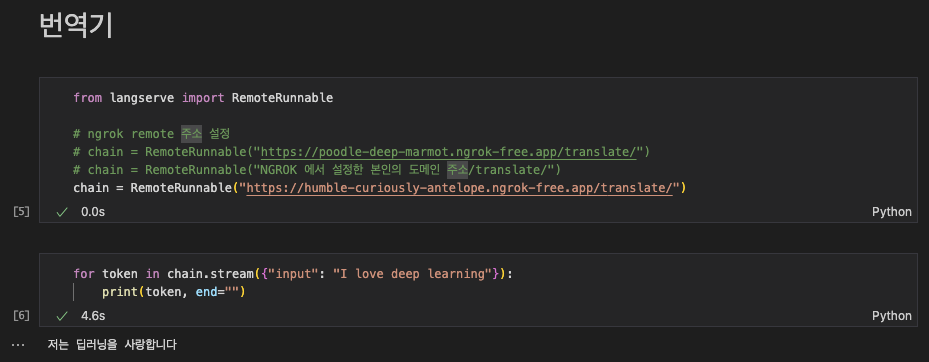

01-remote-invoke.ipynb 파일의 RemoteRunnable 주소를 ngrok 주소로 변경하고 vscode로 실행해봅니다.

from langserve import RemoteRunnable

# ngrok remote 주소 설정

#chain = RemoteRunnable("NGROK 에서 설정한 본인의 도메인 주소/prompt/")

chain = RemoteRunnable("https://humble-curiously-antelope.ngrok-free.app/prompt/")

#chain = RemoteRunnable("http://0.0.0.0:8000/prompt/")

for token in chain.stream({"topic": "딥러닝에 대해서 알려줘"}):

print(token, end="")

잘 작동됩니다.

추가 예제

번역기

from langchain_community.chat_models import ChatOllama

from langchain_core.output_parsers import StrOutputParser

from langchain_core.prompts import ChatPromptTemplate

# LangChain이 지원하는 다른 채팅 모델을 사용합니다. 여기서는 Ollama를 사용합니다.

# llm = ChatOllama(model="EEVE-Korean-10.8B:latest")

llm = ChatOllama(model="eeve:q5")

# 프롬프트 설정

prompt = ChatPromptTemplate.from_template(

"Translate following sentences into Korean:\n{input}"

)

# LangChain 표현식 언어 체인 구문을 사용합니다.

chain = prompt | llm | StrOutputParser()

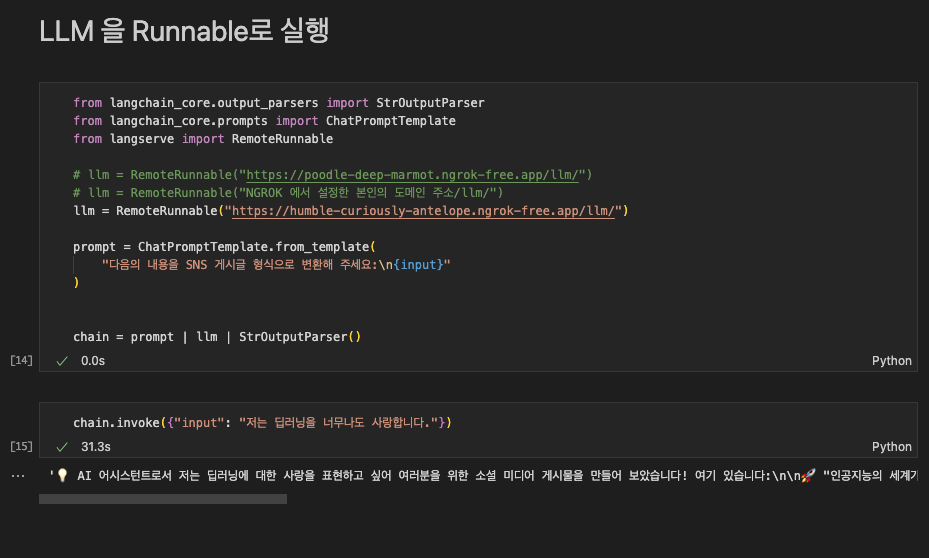

LLM을 Runable로 실행

from langchain_community.chat_models import ChatOllama

from langchain_core.output_parsers import StrOutputParser

from langchain_core.prompts import ChatPromptTemplate

# LangChain이 지원하는 다른 채팅 모델을 사용합니다. 여기서는 Ollama를 사용합니다.

# llm = ChatOllama(model="EEVE-Korean-10.8B:latest")

llm = ChatOllama(model="eeve:q5")

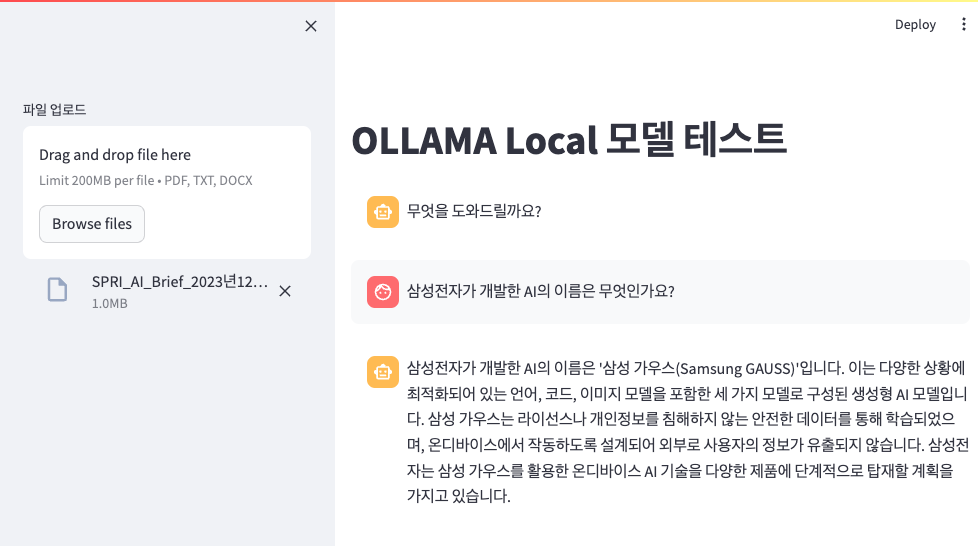

Streamlit으로 PDF rag 해보기

Embedding을 OpenAIEmbeddings을 사용하기위해서 OPENAI_API_KEY를 .env 파일에서 가져옵니다.

% pip install python-dotenv

main.py 내용중 OPEN API KEY세팅과 LANGSERVE_ENDPOINT를 ngrok주소로 업데이트 한후 실행합니다

% streamlit run main.py

You can now view your Streamlit app in your browser.

Local URL: http://localhost:8501

Network URL: http://192.168.0.10:8501

For better performance, install the Watchdog module:

$ xcode-select --install

$ pip install watchdog

예제 > SPRI_AI_Brief_2023년12월호_F.pdf

https://spri.kr/posts?code=AI-Brief

FileNotFoundError: [Errno 2] No such file or directory: 'pdfinfo'

% conda install poppler

Channels:

- defaults

- conda-forge

Platform: osx-arm64

% pip install pdftotext

FileNotFoundError: [Errno 2] No such file or directory: 'tesseract'

% brew install tesseract

==> Auto-updating Homebrew...

Adjust how often this is run with HOMEBREW_AUTO_UPDATE_SECS or disable with

HOMEBREW_NO_AUTO_UPDATE. Hide these hints with HOMEBREW_NO_ENV_HINTS (see `man brew`).

% brew install tesseract-lang

UnicodeEncodeError: 'ascii' codec can't encode characters in position 22-23: ordinal not in range(128)

위 PDF에서 최종 질문 을 해보겠습니다.

실제해보니 내용이 엄청난 영상입니다.

- Ollama

- EEVE 양자화 모델

- LangServe

- ngrok

- Streamlit RAG

- Asitop

감사합니다.

Asitop으로 내 M1 상태 모니터링

% pip install asitop

% sudo asitop

sudo 패스워드 입력